Understanding The 'Undress AI Remover Tool Free GitHub' Phenomenon

The digital landscape shifts quickly. Just when you feel you have a handle on things, a new technology or a fresh discussion appears, and you're left trying to work out what it means. One topic that has generated a lot of talk lately is the search phrase "undress AI remover tool free GitHub." The phrase raises serious questions about technology, ethics, and our digital lives, and, as with most questions about emerging technology, there is more going on under the surface than the wording suggests.

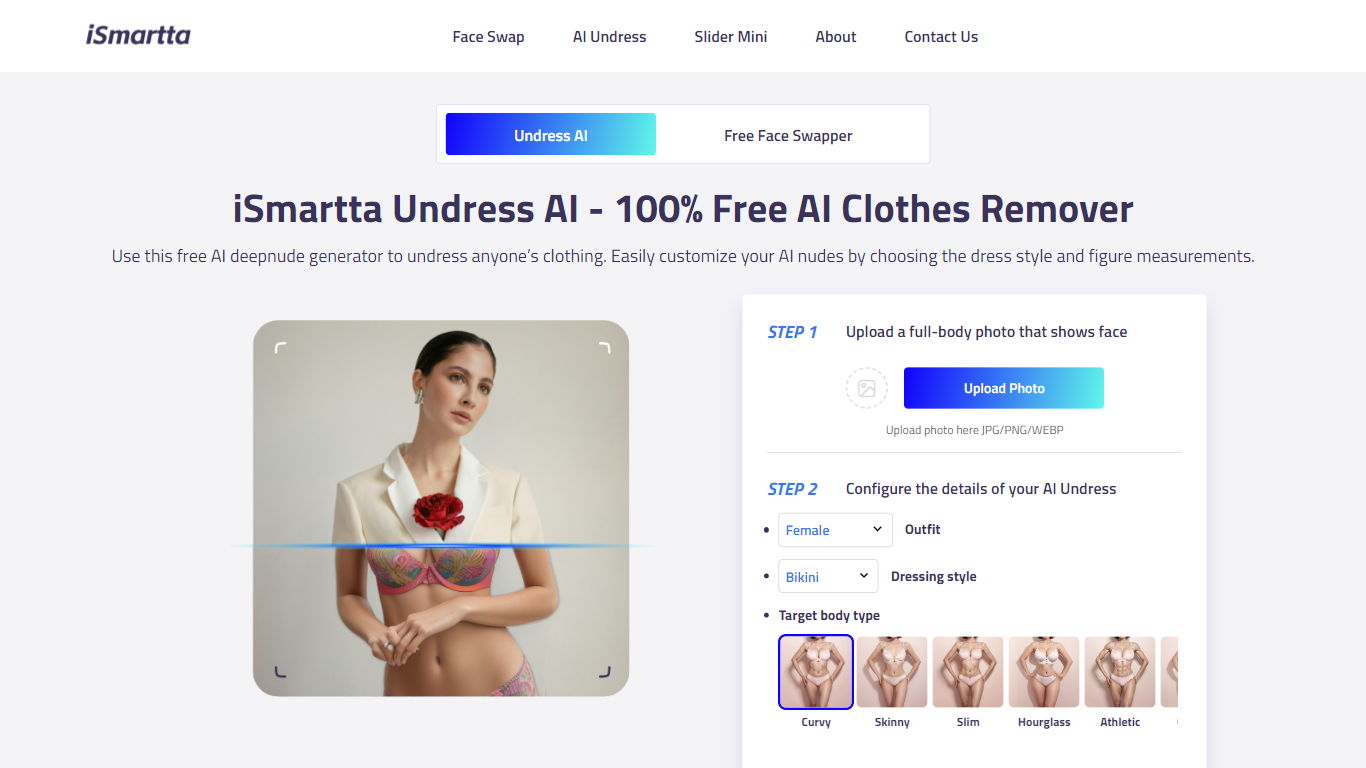

So, what exactly is this all about? At its core, we're talking about artificial intelligence and its ability to manipulate or generate images. The phrase "undress AI remover tool" is ambiguous. It can refer to software that claims to "undress" someone in an image using AI, or, conversely, to software that claims to detect or "remove" AI-generated "undress" content. The "free GitHub" part points to the open-source community, where developers share code freely, which is a powerful driver of innovation. It's a sensitive area, and it needs to be approached with care.

This article aims to shed some light on this complex subject. We'll explore the underlying technology, discuss the significant ethical implications, and look at the role platforms like GitHub play. Our goal is to provide a clear, balanced view that helps you understand the discussion around this topic and think critically about the digital tools you encounter every day.

Table of Contents

- The Digital Frontier: Exploring AI Image Manipulation

- The Quest for a "Remover Tool": Technical Hurdles

- Ethical Imperatives and Legal Realities

- Navigating the Open-Source Community: Responsibility and Oversight

- The Broader Picture: Responsible AI Development

- Frequently Asked Questions About AI Image Tools

- Conclusion

The Digital Frontier: Exploring AI Image Manipulation

Artificial intelligence has changed how we interact with digital images. AI can now create highly realistic pictures, modify existing ones, or generate entirely new scenes from just a few words. This capability comes from generative AI models, which are trained on vast amounts of data. They learn patterns and styles from that data, which allows them to produce very convincing visual content. The technology is still developing, and its possibilities keep growing.

What AI Can Do

When we talk about AI's ability to create or change images, we're looking at sophisticated algorithms. These algorithms can, for example, fill in missing parts of a picture, change someone's expression, or swap faces. They can also transform an ordinary photograph in ways that once required advanced graphic design skills and a lot of time. This is why there are now so many AI art generators and tools that restore old photos. It's a powerful capability, and it's now widely accessible.

The Idea of "Undressing" with AI

The concept of an "undress AI" tool refers to software that uses these generative capabilities to remove clothing from an image, creating a non-consensual intimate image. The output is not a revelation of anything real: the AI fabricates what it "imagines" would be underneath, based on its training data. It's a highly controversial and harmful application of AI, and it goes against fundamental principles of privacy and respect. Such tools often circulate in less regulated parts of the internet, and their existence is a stark reminder that technology can be used for harmful purposes even when the underlying techniques have legitimate uses.

The Quest for a "Remover Tool": Technical Hurdles

Given the existence of AI tools that can generate or modify images in harmful ways, it's natural to wonder whether a "remover" tool could reverse these changes or detect them. The technical hurdles are considerable. The digital world needs its own barriers and detection mechanisms, but these are often very difficult to implement effectively.

Reversing AI: A Complex Challenge

The truth is, reversing AI-generated alterations, especially something like an "undress" effect, is extremely difficult, and in a truly restorative sense it is practically impossible. When an AI generates an image, it isn't overlaying something that can be peeled back. It creates new pixels and new information where none existed before, so the original data in the altered regions is gone. It's like trying to put toothpaste back in the tube. Detecting such images is also a challenge, because generative models keep evolving and detection systems struggle to keep up. This means that once such an image is created and shared, the damage is often already done.

Open-Source Landscape and GitHub

GitHub is a widely used platform where developers collaborate on open-source projects. Many beneficial tools and technologies are developed or mirrored there, including widely used data-processing projects such as Apache Hadoop. It's a place for innovation, learning, and sharing. However, because it's open, projects of all kinds can be hosted there, including ones that are controversial or potentially harmful. The term "free GitHub" implies that such a tool would be openly available, at no cost, for anyone to download and use. This accessibility is generally a strength of open source, but it also means that tools with harmful applications can spread quickly, and that's a serious concern.

Ethical Imperatives and Legal Realities

The discussion around "undress AI remover tools" quickly moves from technical capabilities to profound ethical and legal questions. This isn't just about code; it's about people, their privacy, and their safety. As with any powerful technology, the key questions are how it is used and for what purpose. This is the most important part of the conversation.

Digital Consent and Privacy: Cornerstones of Respect

At the heart of the issue is digital consent. Creating or sharing intimate images of someone without their explicit permission is a severe violation of their privacy and a profound breach of trust. This applies whether the image is real or AI-generated. Every person has a right to control their own image and how it's used, and the proliferation of tools that can generate non-consensual intimate imagery undermines this fundamental right. Respecting each other's boundaries is a basic principle, and it matters especially online.

The Harmful Impact of Misuse

The misuse of AI to create non-consensual intimate imagery can cause immense harm to victims. The psychological distress, reputational damage, and emotional toll can be devastating and long-lasting. It's a form of digital abuse that can ruin lives. This is not a harmless prank or a minor inconvenience; it's a serious act of violation. The very real human cost behind these technological capabilities often gets overlooked in the excitement over new tech, and it needs to be acknowledged.

Legal Consequences and Regulations

Many countries and regions have laws against the creation and distribution of non-consensual intimate imagery, often referred to as "revenge porn" laws. A growing number of jurisdictions extend these laws to AI-generated content, although coverage varies from place to place. Violators can face significant legal penalties, including fines and imprisonment. Platforms like GitHub also have terms of service that prohibit hosting illegal or harmful content. If a project on GitHub is found to facilitate such activities, it can be removed, and the responsible account may be banned. These legal and platform-level repercussions exist to protect everyone's safety.

Navigating the Open-Source Community: Responsibility and Oversight

The open-source community thrives on collaboration and the free exchange of ideas, which is generally a wonderful thing. With this freedom comes significant responsibility, especially for tools that could be misused. Open-source platforms need to manage the potential negative impacts of certain projects without undermining the legitimate work that makes up the vast majority of what they host.

Balancing Innovation and Safety

Developers and platform providers face a delicate balance: fostering innovation while ensuring safety and preventing harm. This means having clear guidelines for what is acceptable and what is not. It's not about stifling creativity, but about directing it towards positive applications and away from those that violate privacy or cause distress. It's a continuous conversation, and one that requires careful thought from everyone involved.

Community Guidelines and Enforcement

Platforms like GitHub have community guidelines that prohibit harmful content, including content that promotes or facilitates illegal activities. Users can report projects that violate these rules. The platform then reviews the reports and takes appropriate action, which can include removing the repository or suspending user accounts. This enforcement is crucial for maintaining a safe and responsible environment within the open-source community: the communities built on these platforms need protection just as much as the underlying infrastructure does.

The Broader Picture: Responsible AI Development

The discussions around "undress AI remover tools" highlight a much broader point: the need for responsible AI development. It's not just about one specific tool; it's about the entire AI ecosystem and how we, as a society, choose to develop and use these powerful technologies. We need to understand the foundational principles that guide AI's development and use, and we need to build AI that serves humanity rather than harms it.

Building AI for Good

AI has incredible potential to solve complex problems in science, healthcare, and many other fields. It can help us understand our world better, improve medical care, and create new forms of art and entertainment. The focus of AI development should be on these positive applications. Developers and researchers have a responsibility to consider the ethical implications of their work and to prioritize the well-being of individuals and society. It's about building tools that uplift rather than degrade, and that is an important distinction.

Educating for a Safer Digital Space

Beyond responsible development, there's also a strong need for public education. Users need to be aware of the capabilities of AI, both positive and negative. They need to understand the risks associated with certain types of content and how to protect their privacy online. This includes knowing how to identify manipulated images and understanding the importance of digital consent. It's about empowering everyone to navigate the digital world safely and critically, because knowledge is key to making good decisions.

Frequently Asked Questions About AI Image Tools

People often have questions about AI image tools, especially when sensitive topics come up. Here are a few common ones:

Is an "undress AI remover tool" legal?

The creation and distribution of non-consensual intimate imagery, whether real or AI-generated, is illegal in many places around the world. Using a tool to create such content would therefore likely be illegal in those places. As for a "remover" or detection tool, its legality would depend on its specific function and how it's used. Either way, the primary focus should be on preventing the creation of harmful content in the first place.

How can I protect myself from AI image manipulation?

Protecting yourself involves being careful about which images you share online and with whom. Keep an eye on your privacy settings on social media platforms. Also, be critical of images you see online, especially if they seem suspicious or too good to be true. Simply being aware that such manipulation is possible is a useful first step.

What should I do if I find non-consensual AI-generated imagery of myself or someone I know?

If you encounter non-consensual intimate imagery, whether real or AI-generated, it's important to take action. Report it to the platform where you found it. You might also consider contacting law enforcement, as this is often a criminal offense. There are also organizations that support victims of online harassment and image abuse, and they can offer guidance.

Conclusion

The conversation around "undress AI remover tool free GitHub" highlights the ongoing tension between technological innovation and ethical responsibility. While open-source platforms like GitHub are vital for progress, they also face the challenge of managing content that can be misused. The creation of non-consensual intimate imagery, regardless of the technology used, is harmful, unethical, and often illegal.

Moving forward, it's essential for developers to build AI within strong ethical frameworks, for platforms to enforce clear guidelines, and for users to be informed and responsible. Our collective commitment to digital consent, privacy, and safety will shape the future of AI. You can learn more about responsible AI development on our site, and also find resources in our AI safety guidelines. By working together, we can try to ensure that AI serves humanity in positive and respectful ways. It's a big task, but it's one we must take on.
